
Embracing cutting-edge technologies like Artificial Intelligence (AI) is vital for businesses to maintain a competitive edge. Microsoft Copilot is a powerful AI tool revolutionising the way we work. However, the power of AI needs to be harnessed wisely.

Safeguarding your data, reputation, and client relationships is paramount. Therefore, you need to make sure your systems are ready from a security perspective before implementing Microsoft Copilot.

In this article, we consider potential Microsoft Copilot security risks and how to mitigate them to ensure a successful rollout.

What is Microsoft 365 Copilot?

Microsoft Copilot is an AI assistant that works alongside your 365 apps, such as Word, Excel, PowerPoint, Outlook, and Teams.

Microsoft’s 2023 Work Trend Index report found that the pace and volume of work have outstripped people’s ability to keep up. Microsoft Copilot is designed to close that gap. It’s a powerful productivity tool that helps automate repetitive and time-consuming tasks, giving you back time to focus on the work that matters most – like growing your business.

How does Copilot work?

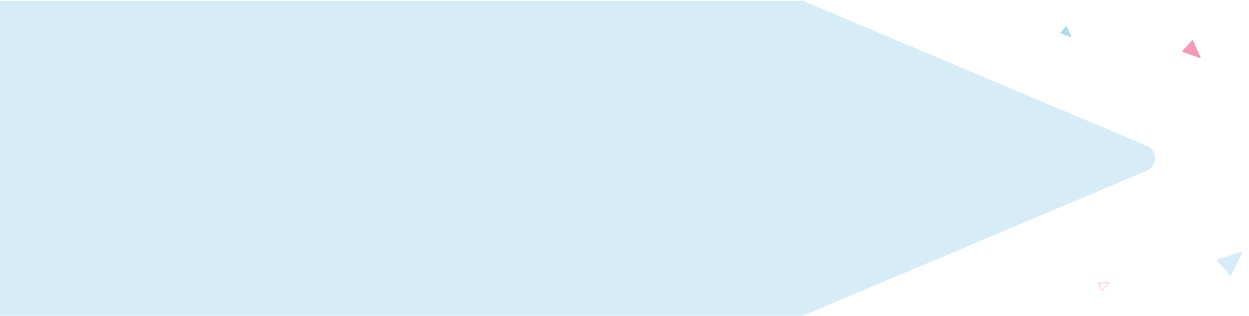

Microsoft Copilot is a sophisticated processing and orchestration engine that works behind the scenes. It leverages the following components to produce relevant results based on your organisational data:

- Large Language Models (LLMs) including GPT-4.

- Your data in the Microsoft Graph such as emails, chats, and documents.

- The Microsoft 365 apps you use every day such as Word and Outlook.

Here’s a simple overview of the process:

- Initial prompt – The user opens the Copilot assistant and inputs a prompt.

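- Pre-processing – Copilot gathers context from your data in the Microsoft Graph, a step known as grounding. It only draws on content the user already has permission to access.

- Modified prompt – The grounded prompt is sent to the LLM, which generates a response.

- Post-processing – The response goes through responsible AI checks, along with security, compliance, and privacy reviews.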

- Final response – Copilot returns the response to the user so they can review it.
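
To make the flow above more concrete, here is a heavily simplified Python sketch. It is not how Copilot is actually implemented: every name in it (`Document`, `pre_process`, `fake_llm` and so on) is hypothetical, simple keyword matching stands in for real retrieval, and `fake_llm` is a placeholder that just returns the context it was given. What it does illustrate is the key security property: grounding only draws on documents the requesting user can already open.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    # Hypothetical stand-in for a file, email, or chat in the Microsoft Graph
    title: str
    text: str
    readers: set[str] = field(default_factory=set)  # users allowed to open it


def tokens(s: str) -> set[str]:
    # Lower-case words with simple punctuation stripped
    return {w.strip(".,?!:;").lower() for w in s.split()}


def pre_process(prompt: str, user: str, corpus: list[Document]) -> list[Document]:
    # Grounding: only documents this user can already open are eligible,
    # then we keep those sharing at least one word with the prompt
    visible = [d for d in corpus if user in d.readers]
    return [d for d in visible if tokens(prompt) & tokens(d.text)]


def modify_prompt(prompt: str, context: list[Document]) -> str:
    # Combine the retrieved context with the user's original prompt
    sources = "\n".join(f"[{d.title}] {d.text}" for d in context)
    return f"Context:\n{sources}\n\nQuestion: {prompt}"


def fake_llm(modified_prompt: str) -> str:
    # Placeholder for the real model call: returns only the context it was given,
    # so we can see exactly what the model would have been able to use
    context, _, _ = modified_prompt.partition("\n\nQuestion:")
    return context.removeprefix("Context:\n")


def post_process(response: str, blocked_terms: frozenset[str]) -> str:
    # Stand-in for the responsible AI and compliance checks
    if tokens(response) & blocked_terms:
        return "[response withheld by policy check]"
    return response


def copilot(prompt: str, user: str, corpus: list[Document],
            blocked_terms: frozenset[str] = frozenset()) -> str:
    context = pre_process(prompt, user, corpus)        # pre-processing (grounding)
    draft = fake_llm(modify_prompt(prompt, context))   # modified prompt sent to the "LLM"
    return post_process(draft, blocked_terms)          # post-processing checks


corpus = [
    Document("Payroll", "Salary bands for 2024 by grade", {"hr_lead"}),
    Document("Handbook", "Holiday policy for all staff", {"hr_lead", "bob"}),
]
print(copilot("What are the salary bands?", "hr_lead", corpus))   # payroll context is used
print(repr(copilot("What are the salary bands?", "bob", corpus)))  # empty: bob cannot open Payroll
```

Notice what happens if the payroll file is overshared: adding `bob` to its readers (`corpus[0].readers.add("bob")`) is enough for the same prompt to return salary data to him. The tool itself behaves correctly; the permissions are what let the data through.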

Microsoft Copilot security concerns

While Microsoft Copilot might sound like a dream come true, it’s important to understand the security risks before rolling it out. Most of these risks stem from the tool’s extensive access to your organisational data, which raises concerns about data security, confidentiality, privacy, and compliance.

Here’s a breakdown of the key Microsoft Copilot data security concerns:

Data exposure

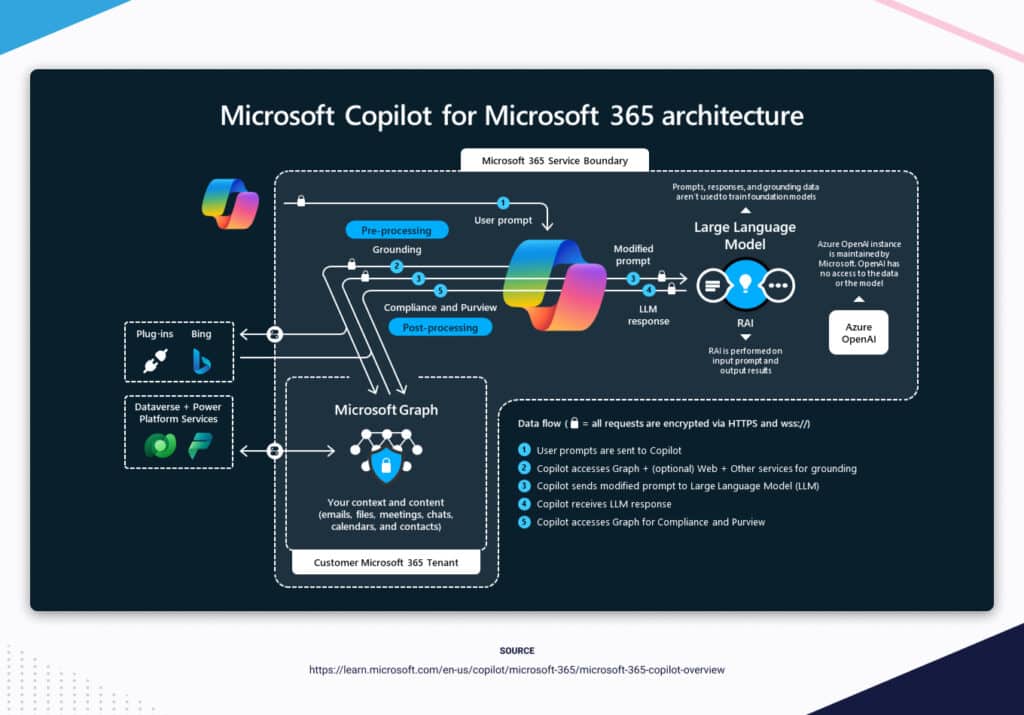

Microsoft Copilot has access to everything the user can access. This works well if the correct access controls are in place. However, as we know all too well, users are often granted far more access than they actually need.

This leaves your data vulnerable and could have catastrophic consequences for your business. Lax controls significantly increase the likelihood of a data breach, unauthorised access, and accidental exposure of confidential information.

Employees could generate content containing sensitive information they were never meant to see and then, to make matters worse, share it with a wider group. A common example is an employee asking Copilot for salary information and the tool surfacing it from an overshared HR file.
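
Because Copilot can surface anything a user can open, it is worth checking who can actually open your most sensitive files before rollout. Access is often granted through groups nested inside other groups, which makes oversharing easy to miss. The sketch below assumes you have already exported document access lists and group memberships into plain Python dictionaries; the names, data, and functions are hypothetical. It expands nested groups into individual users and flags anyone outside a document’s need-to-know list.

```python
# Hypothetical export of group memberships: group name -> members (users or other groups)
Groups = dict[str, set[str]]


def effective_readers(acl: set[str], groups: Groups) -> set[str]:
    """Expand every group on an access list (including nested groups) into individual users."""
    users: set[str] = set()
    seen_groups: set[str] = set()
    stack = list(acl)
    while stack:
        principal = stack.pop()
        if principal in groups:
            if principal not in seen_groups:  # guard against circular nesting
                seen_groups.add(principal)
                stack.extend(groups[principal])
        else:
            users.add(principal)
    return users


def overshared(docs: dict[str, set[str]], groups: Groups,
               need_to_know: dict[str, set[str]]) -> dict[str, set[str]]:
    """Map each document to the users who can read it but are not on its need-to-know list."""
    report: dict[str, set[str]] = {}
    for name, acl in docs.items():
        allowed = need_to_know.get(name)
        if allowed is None:
            continue  # no need-to-know list defined for this document yet
        extra = effective_readers(acl, groups) - allowed
        if extra:
            report[name] = extra
    return report


groups = {"All Staff": {"Finance", "bob", "carol"}, "Finance": {"alice"}}
docs = {"Payroll 2024.xlsx": {"Finance", "All Staff"}, "Budget.xlsx": {"Finance"}}
need_to_know = {"Payroll 2024.xlsx": {"alice"}, "Budget.xlsx": {"alice"}}
print(overshared(docs, groups, need_to_know))  # flags bob and carol on the payroll file
```

Here the payroll file was shared with "All Staff" as well as "Finance", so two people outside the need-to-know list can open it, and so can Copilot on their behalf.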

Cross-client data leakage

Companies that serve multiple clients risk cross-client data leakage. For instance, Microsoft Copilot could generate content for one client that contains proprietary information from another. This is a severe violation of client confidentiality that may breach Non-Disclosure Agreements (NDAs) and potentially damage the client relationship.
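
Copilot grounds its answers on whatever the user can access, so if staff can reach several clients’ files, nothing in that step keeps them apart. As an illustration only, the Python sketch below assumes each source document carries a hypothetical `client_id` tag and refuses to assemble a draft for one client if any source belongs to another. In Microsoft 365 itself, the practical control is usually separating client content into sites or groups with distinct membership, so the boundary is enforced through permissions rather than code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Source:
    title: str
    client_id: Optional[str]  # hypothetical tag; None means internal, non-client material


class CrossClientLeak(Exception):
    """Raised when a draft for one client draws on another client's material."""


def check_sources(target_client: str, sources: list[Source]) -> list[Source]:
    """Allow the target client's documents and internal material; reject anything else."""
    leaked = [s for s in sources if s.client_id not in (None, target_client)]
    if leaked:
        titles = ", ".join(s.title for s in leaked)
        raise CrossClientLeak(f"{target_client} draft uses other clients' sources: {titles}")
    return sources


sources = [Source("Acme brief", "acme"), Source("Globex pricing", "globex")]
try:
    check_sources("acme", sources)
except CrossClientLeak as err:
    print(err)  # acme draft uses other clients' sources: Globex pricing
```

Internal material such as style guides or templates is allowed through deliberately; only material tagged to a different client is treated as a leak.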

Lack of labels

Just like your 365 apps, Microsoft Copilot respects your sensitivity labels and their associated protections. Sensitivity labels are a Microsoft Purview feature that classifies and protects content based on how sensitive it is. They let you protect documents by enforcing encryption, access restrictions, and visual markings according to their classification.

Again, this works well in theory, but it relies on proper label management, which isn’t always in place. As employees create more data over time, labels tend to become messy and outdated if they’re not actively managed.

On top of that, content generated by Copilot doesn’t always inherit the labels of its source data. Unlabelled content makes it difficult for employees to identify and manage sensitive information, which can lead to data breaches that go unnoticed and to non-compliance with data protection regulations.
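
A common policy is that anything generated from labelled sources should carry at least the most restrictive of their labels, while anything generated from unlabelled sources should be flagged for manual classification. The Python sketch below expresses that rule; the label names and their ordering are illustrative, and in practice label priority is set in your own Microsoft Purview configuration rather than in code like this.

```python
from typing import Optional

# Illustrative label taxonomy, ordered from least to most restrictive
LABEL_PRIORITY = ["Public", "General", "Confidential", "Highly Confidential"]


def inherited_label(source_labels: list[Optional[str]]) -> Optional[str]:
    """Return the most restrictive label among the sources, or None if none are labelled."""
    labelled = [label for label in source_labels if label is not None]
    unknown = [label for label in labelled if label not in LABEL_PRIORITY]
    if unknown:
        raise ValueError(f"Unrecognised labels: {unknown}")
    if not labelled:
        return None  # nothing to inherit: send the draft for manual classification
    return max(labelled, key=LABEL_PRIORITY.index)


print(inherited_label(["General", "Confidential", None]))  # Confidential
print(inherited_label([None, None]))                        # None
```

The rule is only as good as the source labels: a sensitive file that was never labelled contributes nothing to the result, which is why label hygiene matters before rollout.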

Users blindly trusting AI content

Another Microsoft Copilot data security pitfall is that users may fall into the trap of blindly trusting the tool’s output. AI-generated content is not guaranteed to be 100% factual or safe. Neglecting to review the content might result in sensitive or inaccurate information being shared.

Blindly trusting AI content could have severe repercussions for businesses. Not only does it raise data protection and compliance concerns, but it could negatively impact the company’s image with clients and stakeholders.

For example, the finance team might ask Copilot to generate a document containing year-end sales data, and rather than using the latest figures, the tool may pull in older data.

How to ensure a secure Copilot rollout

Safeguarding your data is paramount, so you need to ensure that Microsoft Copilot is implemented correctly.

Here are some ways to ensure a secure Copilot rollout:

Access controls

Controlling what users have access to is crucial for maintaining data security when rolling out Microsoft Copilot. Access to data should be strictly on a need-to-know basis.

- Permission models – Fully utilise the permission models available in Microsoft 365 to ensure the right users have the right access to the right content within your organisation.

- Zero trust principles – When it comes to permissions, the best approach is “never trust, always verify”. You can apply zero trust principles to Copilot across your entire architecture, from users and their devices to the data they can access.

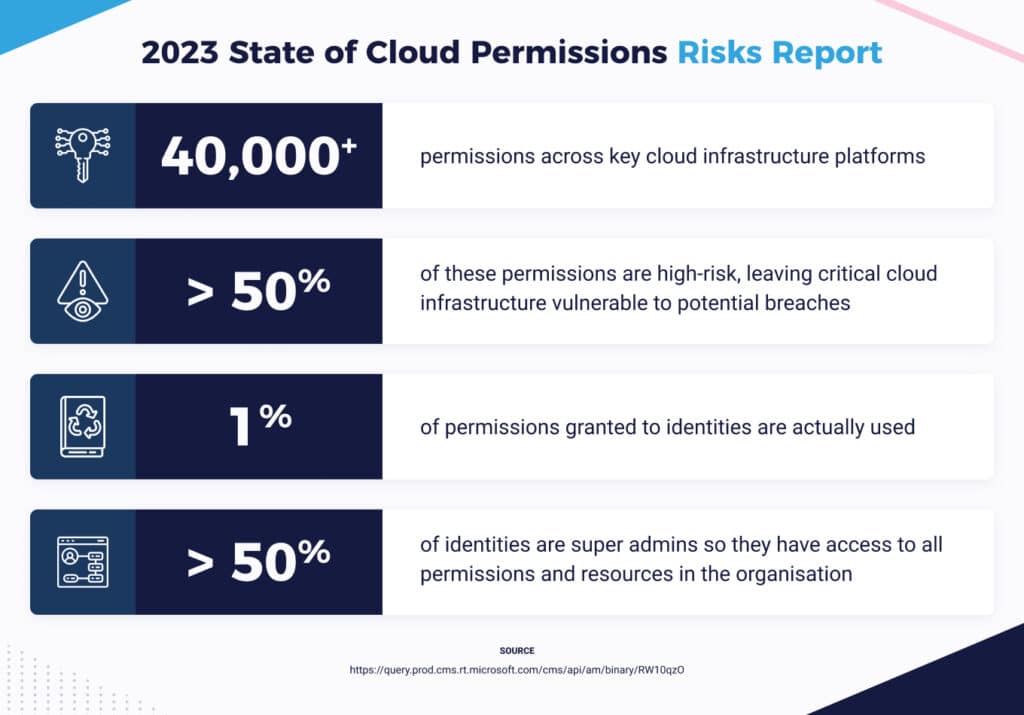

- Sharing policies – Evaluate both internal and external sharing policies for SharePoint and OneDrive. By default, Microsoft 365 allows users to share files with anyone, inside or outside your organisation. Review these settings and apply restrictions to prevent oversharing and accidental sharing.
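
Before tightening sharing settings, it helps to know how many existing links are broader than your new policy would allow. The Python sketch below assumes you have exported a list of sharing links with their scopes; the three scope names loosely mirror the SharePoint and OneDrive link types (anyone with the link, people in your organisation, specific people), but the data model and the `too_broad` function are hypothetical.

```python
from dataclasses import dataclass

# Illustrative link scopes, ordered from narrowest to broadest
SCOPES = ["specific_people", "organization", "anyone"]


@dataclass(frozen=True)
class SharingLink:
    file: str
    scope: str  # one of SCOPES


def too_broad(links: list[SharingLink], max_scope: str) -> list[SharingLink]:
    """Return the links whose scope is broader than the policy allows."""
    limit = SCOPES.index(max_scope)
    return [link for link in links if SCOPES.index(link.scope) > limit]


links = [
    SharingLink("Q3 forecast.xlsx", "anyone"),
    SharingLink("Team rota.docx", "organization"),
    SharingLink("Contract draft.docx", "specific_people"),
]
print([link.file for link in too_broad(links, "organization")])  # ['Q3 forecast.xlsx']
```

Running the same check with a stricter maximum (for example `"specific_people"`) shows how many links a tighter policy would affect before you change anything in the admin settings.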

Labels

To protect your sensitive data, you need proper label management in place. Start with a clearly defined classification strategy, then train employees to classify sensitive data systematically.
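
A classification strategy ultimately comes down to rules of the form “content like this gets that label”. Microsoft Purview can apply labels automatically based on content, but the rules still have to come from you. The Python sketch below shows the idea with a hypothetical rule set; the patterns and label names are illustrative only. Checking the most restrictive rule first means a document that matches several rules gets the strictest label.

```python
import re

# Hypothetical rules, listed from most to least restrictive
RULES = [
    ("Highly Confidential", re.compile(r"\b(salary|payroll|national insurance)\b", re.IGNORECASE)),
    ("Confidential", re.compile(r"\b(client|contract|nda)\b", re.IGNORECASE)),
]
DEFAULT_LABEL = "General"


def suggest_label(text: str) -> str:
    """Return the first (most restrictive) label whose pattern matches, else the default."""
    for label, pattern in RULES:
        if pattern.search(text):
            return label
    return DEFAULT_LABEL


print(suggest_label("Payroll run for March"))       # Highly Confidential
print(suggest_label("Draft NDA for a new client"))  # Confidential
print(suggest_label("Team lunch on Friday"))        # General
```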

Employee training

Most data breaches involve human error. As a preventative measure, you should provide employee training so that staff fully understand Microsoft Copilot’s capabilities and limitations.

It’s important that employees don’t rely solely on Copilot’s output and that they take care when generating content involving sensitive data. They must take responsibility for checking Copilot-generated content to ensure that it’s factually accurate and doesn’t leak private information.

Final thoughts

Microsoft Copilot has huge potential to boost productivity and transform your business. However, the transformative power of AI must be harnessed responsibly. By utilising Microsoft’s security features and educating employees, you can mitigate potential Copilot security risks to ensure a successful rollout.

At ITVET, we’re seeing our customers rapidly adopting AI to help reduce costs, increase revenue, and facilitate decision-making. Find out how we can help you maximise the potential of Copilot while safeguarding your data, reputation, and client relationships. Get in touch today for a free consultation with one of our Microsoft experts.